Use Linux Traffic Control as impairment node in a test environment (part 2)

Rigorously testing a network device or distributed service requires complex, realistic network test environments. Linux Traffic Control (tc) with Network Emulation (netem) provides the building blocks to create an impairment node that simulates such networks.

This three-part series describes how an impairment node can be set up using Linux Traffic Control. The first post introduced Linux Traffic Control and its queuing disciplines. This second part shows which traffic control configurations are available to impair traffic and how to use them. The third and last part will describe how to get an impairment node up and running!

Recap

The previous post introduced Linux traffic control and the queuing disciplines that define its behavior. It also described what the default qdisc configuration of a Linux interface looks like. Finally, it showed how this default configuration can be replaced by a hierarchy of custom queuing disciplines.

Our goal is still to create an impairment node that manipulates traffic between two of its Ethernet interfaces, eth0 and eth1, while being managed through a third interface (e.g. eth2).

Configuring traffic impairments

To impair traffic leaving interface eth0, we replace the default root queuing discipline with one of our own. Note that for a symmetrical impairment, the same must be done on the other interface eth1!

Adding a new qdisc to the root of an interface's qdisc hierarchy (using tc qdisc add) replaces the default configuration. Deleting a custom configuration (using tc qdisc del) restores the default. Adding a root qdisc fails if a custom one is already installed, so delete the old one first.

A qdisc can also be modified in place (using tc qdisc change or tc qdisc replace). However, the examples below all start from the default configuration.
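The reset-then-add pattern and the symmetric setup on eth0 and eth1 can be wrapped in a small shell helper. This is a minimal sketch. The function names `set_root_qdisc` and `set_symmetric` and the `DRY_RUN` switch are hypothetical. Only the interface names come from the setup described above.

```shell
#!/bin/sh
# Hypothetical helpers: reset an interface to its default qdisc, then install
# a new root qdisc. Real execution requires root privileges.
# Set DRY_RUN=1 to print the tc commands instead of executing them.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

set_root_qdisc() {
    iface=$1
    shift
    # Deleting fails if only the default qdisc is present, so ignore errors
    run tc qdisc del dev "$iface" root 2>/dev/null || true
    run tc qdisc add dev "$iface" root "$@"
}

# Apply the same impairment to traffic leaving eth0 and traffic leaving eth1
set_symmetric() {
    for iface in eth0 eth1; do
        set_root_qdisc "$iface" "$@"
    done
}
```

For example, `DRY_RUN=1 set_symmetric netem delay 50ms` prints the four tc commands without touching the interfaces.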

Available impairment configurations

So which kinds of impairments are available to simulate network impairments? Let’s have a look!

Caveat: Traffic Control uses quite odd units. Most importantly, kbps denotes kilobytes per second, not kilobits per second. Kilobits per second are written kbit, so one kbps is eight times as much as one kbit.

For sizes (such as a burst), the k prefix means 1024, so 32kbit is 4096 bytes. Older tc versions also used 1024 for rates, so one kbps equalled 1024 bytes per second instead of the expected 1000 bits per second. Recent iproute2 releases use SI prefixes (powers of 1000) for rates and spell the binary variants kibit, mibit and so on.

For a full overview, see the UNITS section of the tc man page or this faq post.
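To make the unit arithmetic concrete, here is a small converter from tc-style rate strings to bits per second. It is a sketch under stated assumptions:

- The prefix base is a parameter, 1000 by default or 1024 for the older binary convention, because the value tc actually uses depends on its version.
- Only lowercase k/m/g bit and byte units are handled.
- The function name `tc_rate_to_bits` is hypothetical.

```shell
#!/bin/sh
# tc_rate_to_bits RATE [BASE]
# Convert a tc rate such as "1mbit" or "2kbps" to bits per second.
# BASE is the assumed value of the k prefix: 1000 (default) or 1024.
# Only lowercase bit- and byte-based units with k/m/g prefixes are handled.
tc_rate_to_bits() {
    rate=$1
    base=${2:-1000}
    case $rate in
        *ibit|*ibps) echo "IEC prefixes not handled: $rate" >&2; return 1 ;;
        *kbit) num=${rate%kbit}; scale=$base ;;
        *mbit) num=${rate%mbit}; scale=$((base * base)) ;;
        *gbit) num=${rate%gbit}; scale=$((base * base * base)) ;;
        *bit)  num=${rate%bit};  scale=1 ;;
        *kbps) num=${rate%kbps}; scale=$((8 * base)) ;;   # bytes, not bits
        *mbps) num=${rate%mbps}; scale=$((8 * base * base)) ;;
        *gbps) num=${rate%gbps}; scale=$((8 * base * base * base)) ;;
        *bps)  num=${rate%bps};  scale=8 ;;
        *) echo "unsupported unit: $rate" >&2; return 1 ;;
    esac
    # awk also accepts fractional values such as "1.5mbit"
    awk -v n="$num" -v s="$scale" 'BEGIN { printf "%.0f\n", n * s }'
}
```

With the binary interpretation, `tc_rate_to_bits 1kbps 1024` prints 8192, while `tc_rate_to_bits 1kbit 1024` prints 1024. In either convention the two units differ by a factor of eight.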

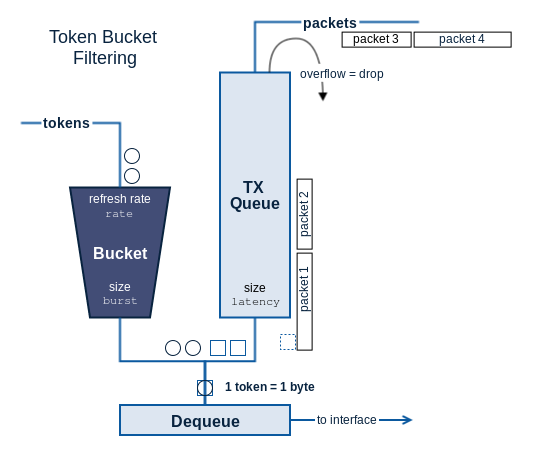

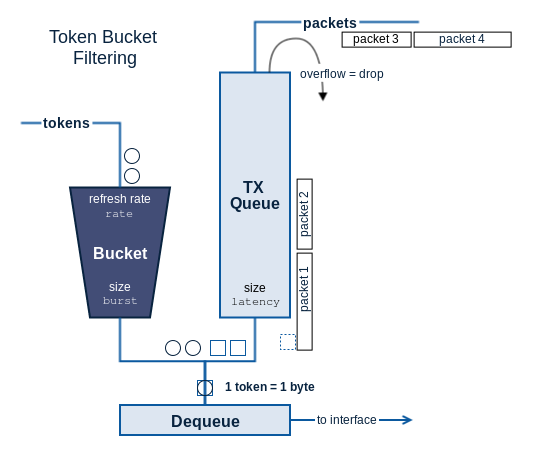

Throughput

To limit the outgoing traffic on an interface, we can use the Token Bucket Filter or tbf qdisc (man page, read more, picture). In the tbf queuing discipline each outgoing traffic byte is serviced by a single token. These tokens are refreshed at the desired output rate. Tokens are saved up in a bucket of limited size, so smaller bursts of traffic can still be handled at a higher rate.

This qdisc is typically used to impose a soft limit on the traffic. Limited bursts can still be sent at line rate, while the specified rate is respected on average.

$ tc qdisc add dev eth0 root tbf rate 1mbit burst 32kbit latency 400ms

Set the root queuing discipline of eth0 to tbf, with the output rate limited to 1 Mbps. Allows bursts of up to 32kbit to be sent at maximum rate. Packets accumulating a latency of over 400 ms due to the rate limitation are dropped.
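The example specifies latency rather than a byte limit, so tc has to convert it into a queue size. The sketch below rests on our reading of iproute2's tbf code; treat that as an assumption and note that peakrate is ignored. Under it, the queue holds the bytes that drain at the configured rate during the latency window, plus the bucket size. Here burst 32kbit corresponds to 32 × 1024 / 8 = 4096 bytes. The function name `tbf_limit_bytes` is hypothetical.

```shell
#!/bin/sh
# tbf_limit_bytes RATE_BITS_PER_S LATENCY_MS BURST_BYTES
# Approximate queue limit (bytes) for a tbf configured with "latency":
#   limit ~= rate_in_bytes_per_second * latency_in_seconds + burst
# Assumed formula; peakrate is not taken into account.
tbf_limit_bytes() {
    awk -v r="$1" -v l="$2" -v b="$3" \
        'BEGIN { printf "%.0f\n", r / 8 * l / 1000 + b }'
}
```

For the eth0 command above, `tbf_limit_bytes 1000000 400 4096` prints 54096. That is roughly 53 KiB of queue before packets are dropped.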

Using extra options we can limit the peak rate at which bursts themselves are handled. In other words, we can configure the speed at which the bucket gets emptied (read more, picture). By using the same value for both rate and peakrate, we can even put a hard throughput limit on the output.

$ tc qdisc add dev eth1 root tbf rate 1mbit burst 32kbit latency 200ms \
    peakrate 1mbit minburst 1520

Set the root queuing discipline of eth1 to tbf, with the output rate limited to 1 Mbps. Since bursts are also transmitted at a peakrate of 1 Mbps, this is a hard limit. However, a minburst of 1520 bytes can still be sent at maximum rate, so that a typical full-size packet can be sent at once. (1514 bytes is a typical Ethernet frame size: a 1500-byte MTU plus a 14-byte header.) Packets accumulating a latency of over 200 ms due to the rate limitation are dropped.

Latency and jitter

Using the netem qdisc we can emulate network latency and jitter on all outgoing packets (man page, read more). Some examples:

$ tc qdisc add dev eth0 root netem delay 100ms

<delay packets for 100ms>

$ tc qdisc add dev eth0 root netem delay 100ms 10ms

<delay packets with value from uniform [90ms-110ms] distribution>

$ tc qdisc add dev eth0 root netem delay 100ms 10ms 25%

<delay packets with value from uniform [90ms-110ms] distribution and 25% \
correlated with value of previous packet>

$ tc qdisc add dev eth0 root netem delay 100ms 10ms distribution normal

<delay packets with value from normal distribution (mean 100ms, jitter 10ms)>

$ tc qdisc add dev eth0 root netem delay 100ms 10ms 25% distribution normal

<delay packets with value from normal distribution (mean 100ms, jitter 10ms) \
and 25% correlated with value of previous packet>
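A test may need to step through several delay values without resetting the interface. In that case the first value can be installed with tc qdisc add and later ones applied with tc qdisc change. This is a minimal sketch:

- `delay_ramp` and `DRY_RUN` are hypothetical names.
- The interface is assumed to start from its default qdisc.

```shell
#!/bin/sh
# delay_ramp IFACE HOLD_SECONDS DELAY_MS...
# Install a netem delay on IFACE, then step it through the given values,
# holding each one for HOLD_SECONDS. DRY_RUN=1 only prints the commands.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

delay_ramp() {
    iface=$1
    hold=$2
    shift 2
    verb=add   # the first step creates the netem qdisc
    for d in "$@"; do
        run tc qdisc "$verb" dev "$iface" root netem delay "${d}ms"
        run sleep "$hold"
        verb=change   # later steps modify it in place
    done
}
```

For example, `DRY_RUN=1 delay_ramp eth0 30 50 100 200` prints the command sequence. Run it as root without DRY_RUN to apply it.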

Packet loss

Using the netem qdisc packet loss can be emulated as well (man page, read more). Some simple examples:

$ tc qdisc add dev eth0 root netem loss 0.1%

<drop packets randomly with probability of 0.1%>

$ tc qdisc add dev eth0 root netem loss 0.3% 25%

<drop packets randomly with probability of 0.3% and 25% correlated with drop \
decision for previous packet>

But netem can also emulate more complex loss mechanisms, such as the Gilbert-Elliot scheme. This scheme defines two states, Good (or gap) and Bad (or burst). You specify the drop probability within each state and the probabilities of switching between them. See section 3 of this paper for more info.

$ tc qdisc add dev eth0 root netem loss gemodel 1% 10% 70% 0.1%

<drop packets using Gilbert-Elliot scheme with probabilities \
move-to-burstmode (p) of 1%, move-to-gapmode (r) of 10%, \
drop-in-burstmode (1-h) of 70% and drop-in-gapmode (1-k) of 0.1%>
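The four Gilbert-Elliot parameters can be turned into an expected average loss rate. This assumes netem behaves as a standard two-state Markov chain. Under that assumption, a fraction p/(p+r) of packets pass through the Bad state and r/(p+r) through the Good state. Each fraction is then weighted by that state's drop probability. The function name `ge_loss_rate` is hypothetical.

```shell
#!/bin/sh
# ge_loss_rate P R ONE_MINUS_H ONE_MINUS_K   (fractions, e.g. 0.01 for 1%)
# Long-run loss rate of a two-state Gilbert-Elliott Markov chain:
#   P(bad)  = p / (p + r)
#   P(good) = r / (p + r)
#   loss    = P(bad) * (1-h) + P(good) * (1-k)
ge_loss_rate() {
    awk -v p="$1" -v r="$2" -v bh="$3" -v gk="$4" 'BEGIN {
        pb = p / (p + r)   # stationary probability of the Bad state
        pg = r / (p + r)   # stationary probability of the Good state
        printf "%.4f\n", pb * bh + pg * gk
    }'
}
```

For the example above, `ge_loss_rate 0.01 0.10 0.70 0.001` prints 0.0645. About 6.5% of packets are dropped on average, mostly in bursts, since the chain stays in the Bad state for 1/r = 10 packets on average.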

Duplication and corruption

Packet duplication and corruption are also possible with netem (man page, read more). The examples are self-explanatory.

$ tc qdisc add dev eth0 root netem duplicate <chance> [<correlation>]

$ tc qdisc add dev eth0 root netem corrupt <chance> [<correlation>]

Reordering

Finally, netem allows packets to be reordered (man page, read more). This is achieved by sending some packets immediately while holding the others back for the configured delay. In other words, reordering will only occur if the interval between packets is smaller than that delay!
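The effect of holding packets back can be illustrated with a toy model, which is not netem's code. Instead of drawing random numbers, the hypothetical `reorder_sim` function takes an explicit pattern. Each letter marks a packet as held back (D) or sent immediately (I), and the function prints the order in which the packets arrive.

```shell
#!/bin/sh
# reorder_sim INTERVAL_MS DELAY_MS PATTERN
# Toy model: packet k (1-based) is sent at (k-1)*INTERVAL_MS. PATTERN holds
# one letter per packet: D = delayed by DELAY_MS, I = sent immediately.
# Prints packet numbers in arrival order (ties broken by packet number).
reorder_sim() {
    awk -v t="$1" -v d="$2" -v p="$3" 'BEGIN {
        for (k = 1; k <= length(p); k++) {
            arrival = (k - 1) * t + (substr(p, k, 1) == "D" ? d : 0)
            print arrival, k
        }
    }' | sort -k1,1n -k2,2n |
        awk '{ printf "%s%s", (NR > 1 ? " " : ""), $2 } END { print "" }'
}
```

With packets sent every 4 ms and a 10 ms delay, `reorder_sim 4 10 DDI` prints `3 1 2`: the third packet overtakes the two delayed ones. With a 20 ms interval, `reorder_sim 20 10 DDI` prints `1 2 3`, because the interval exceeds the delay.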

Combining multiple impairments

Configuring a single impairment is useful for debugging, but to emulate real networks, multiple impairments often need to be active at the same time.

Multiple netem impairments can be combined into a single qdisc, as shown in the following example.

$ tc qdisc add dev eth0 root netem delay 10ms reorder 25% 50% loss 0.2%

To combine these impairments with rate limitation, we need to chain the tbf and netem qdiscs, as described in the first post of this series. For example, this leads to the following commands:

$ tc qdisc del dev eth0 root
$ tc qdisc add dev eth0 root handle 1: netem delay 10ms reorder 25% 50% loss 0.2%
$ tc qdisc add dev eth0 parent 1: handle 2: tbf rate 1mbit burst 32kbit latency 400ms
$ tc qdisc show dev eth0
qdisc netem 1: root refcnt 2 limit 1000 delay 10.0ms loss 0.2% reorder 25% 50% gap 1
qdisc tbf 2: parent 1: rate 1000Kbit burst 4Kb lat 400.0ms

To have packets rate limited first and impaired afterwards, simply swap the two qdiscs, making tbf the root and netem its child.
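The swapped chain can be sketched as follows, reusing the parameters of the example above. The helper name `rate_then_impair` and the `DRY_RUN` switch are hypothetical. Attaching the child at parent 1:1 follows the usual idiom for tbf, whose single class is 1:1.

```shell
#!/bin/sh
# Sketch: rate limit first (tbf as root), then impair (netem as child).
# Real execution requires root; DRY_RUN=1 only prints the commands.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

rate_then_impair() {
    iface=$1
    # Start from the default configuration; ignore the error if already there
    run tc qdisc del dev "$iface" root 2>/dev/null || true
    # tbf is the root; its class 1:1 hosts the netem child qdisc
    run tc qdisc add dev "$iface" root handle 1: tbf rate 1mbit burst 32kbit latency 400ms
    run tc qdisc add dev "$iface" parent 1:1 handle 2: netem delay 10ms reorder 25% 50% loss 0.2%
}
```

Afterwards, `tc qdisc show dev eth0` can be used to check that tbf is now listed as the root.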

Towards an impairment node

All building blocks are now in place to configure an actual impairment node. This is the subject of the third and last blog post in this series!

I went through your blog and it is easy to follow and understand. However, I have one question: how can we add bandwidth limiting and jitter with a uniform distribution using tc? (For example, bandwidth 100MB, jitter 0.5ms, delay 10ms.)

This is explained in the “combining multiple impairments” section. You need to chain a rate limiting qdisc and the latency impairment together.

So for your example this would become:

$ tc qdisc add dev eth0 root handle 1: netem delay 10ms 1ms

$ tc qdisc add dev eth0 parent 1: handle 2: tbf rate 100mbps burst 32kbit latency 400ms

Hope this helps!

Hello, would it be possible to use this command to emulate packet drop on a specific Ethernet interface?

My setup:

router <-----> PC (using eth3)

I want to have packet drop on eth3.

Right now we use a machine (sprint machine) to simulate packet drop.

Thanks

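This is indeed possible, if your PC is running a Linux operating system.

On the Linux PC, you would have to use something like the following command (for a 0.5% drop):

$ tc qdisc add dev eth3 root netem loss 0.5%

Note that this only drops packets that the PC sends out on eth3; traffic arriving from the router is not affected.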